polytales

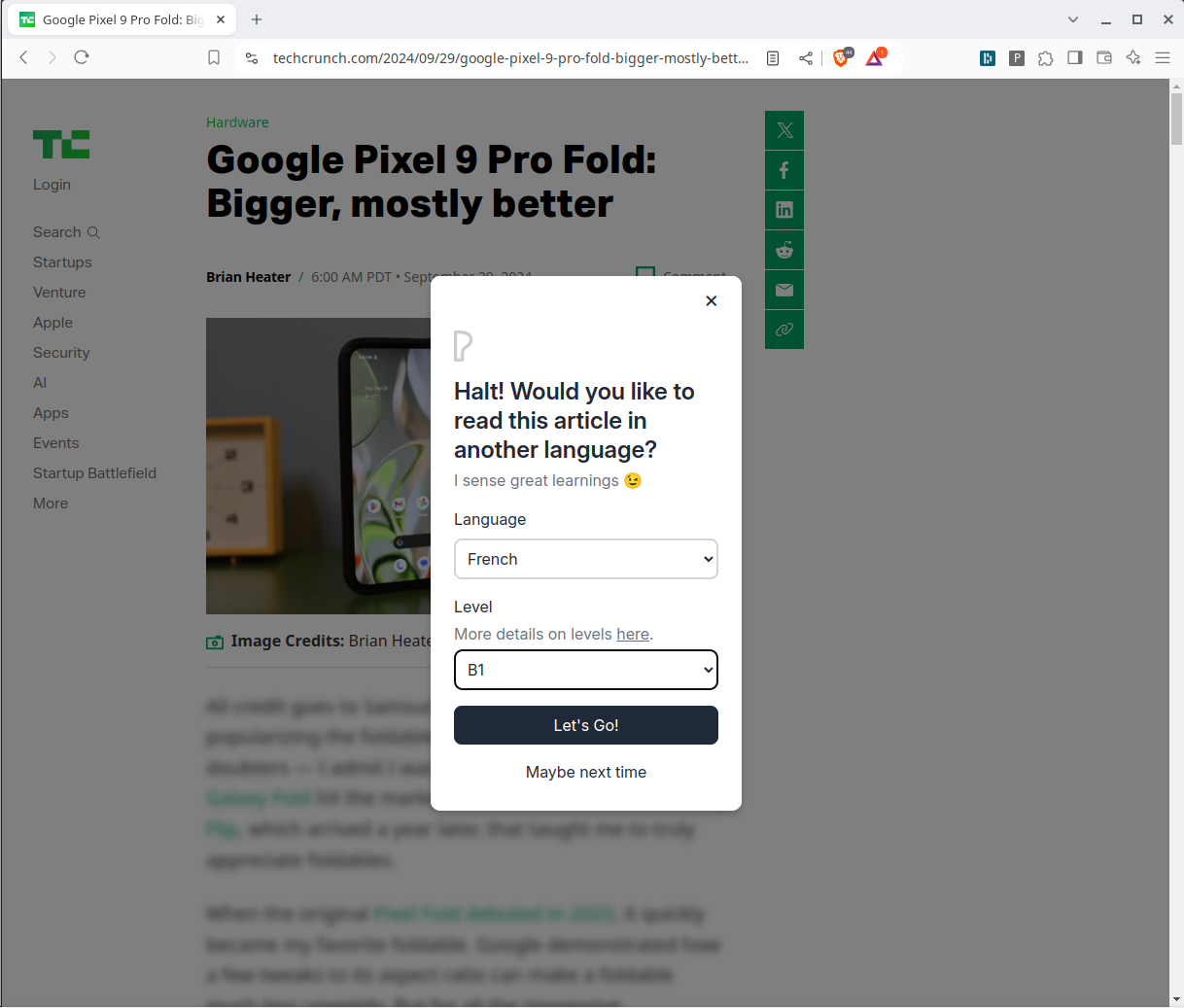

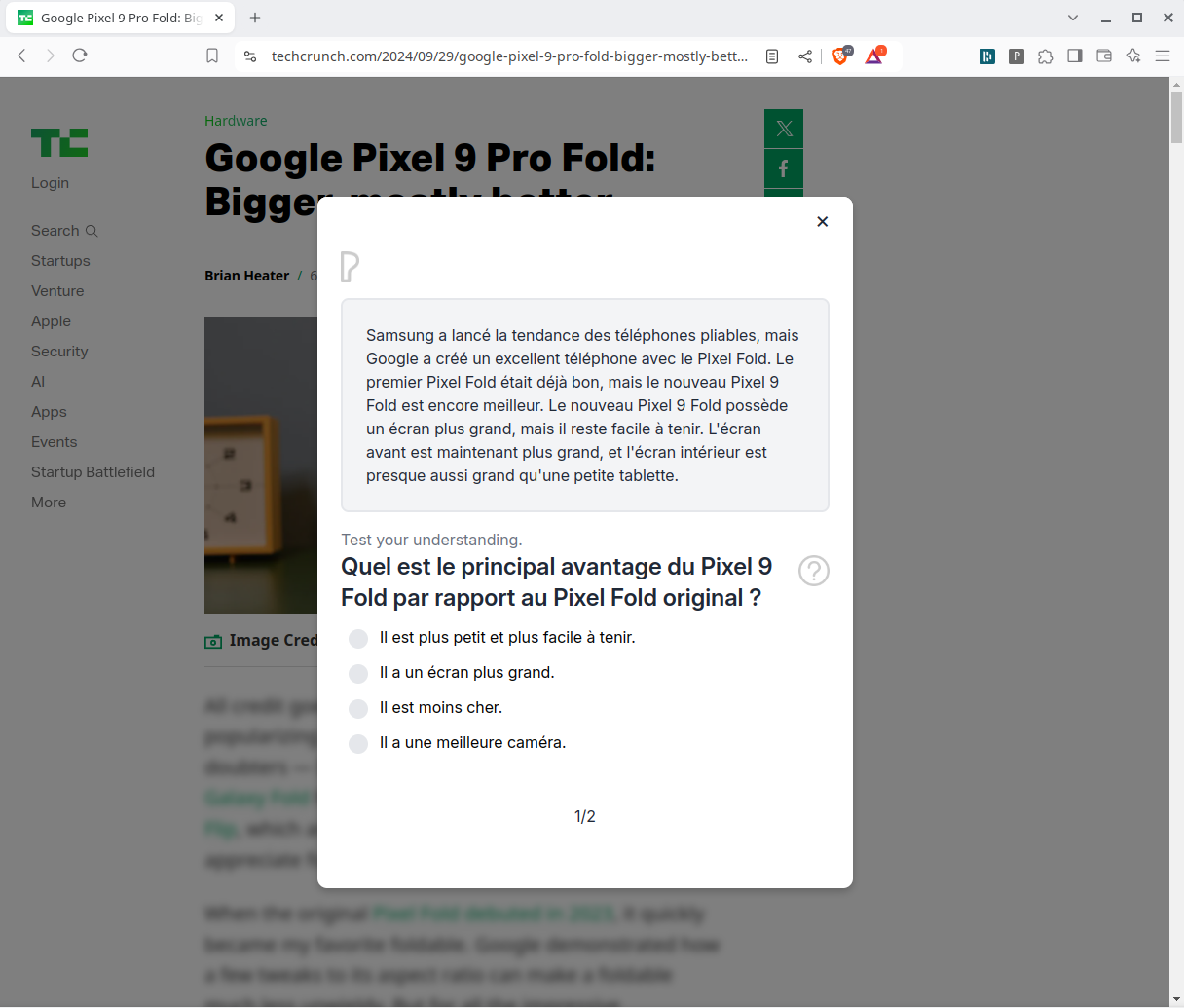

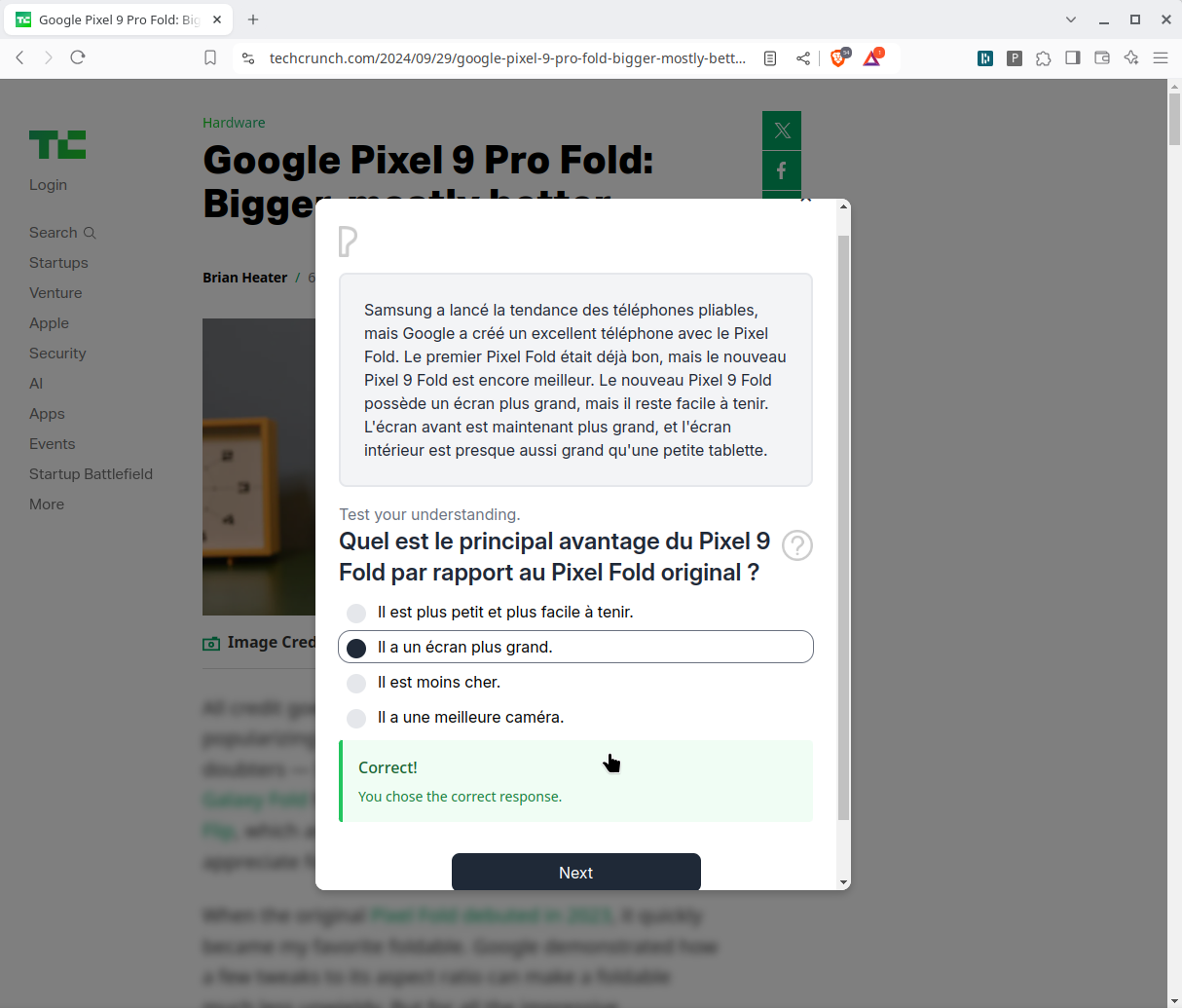

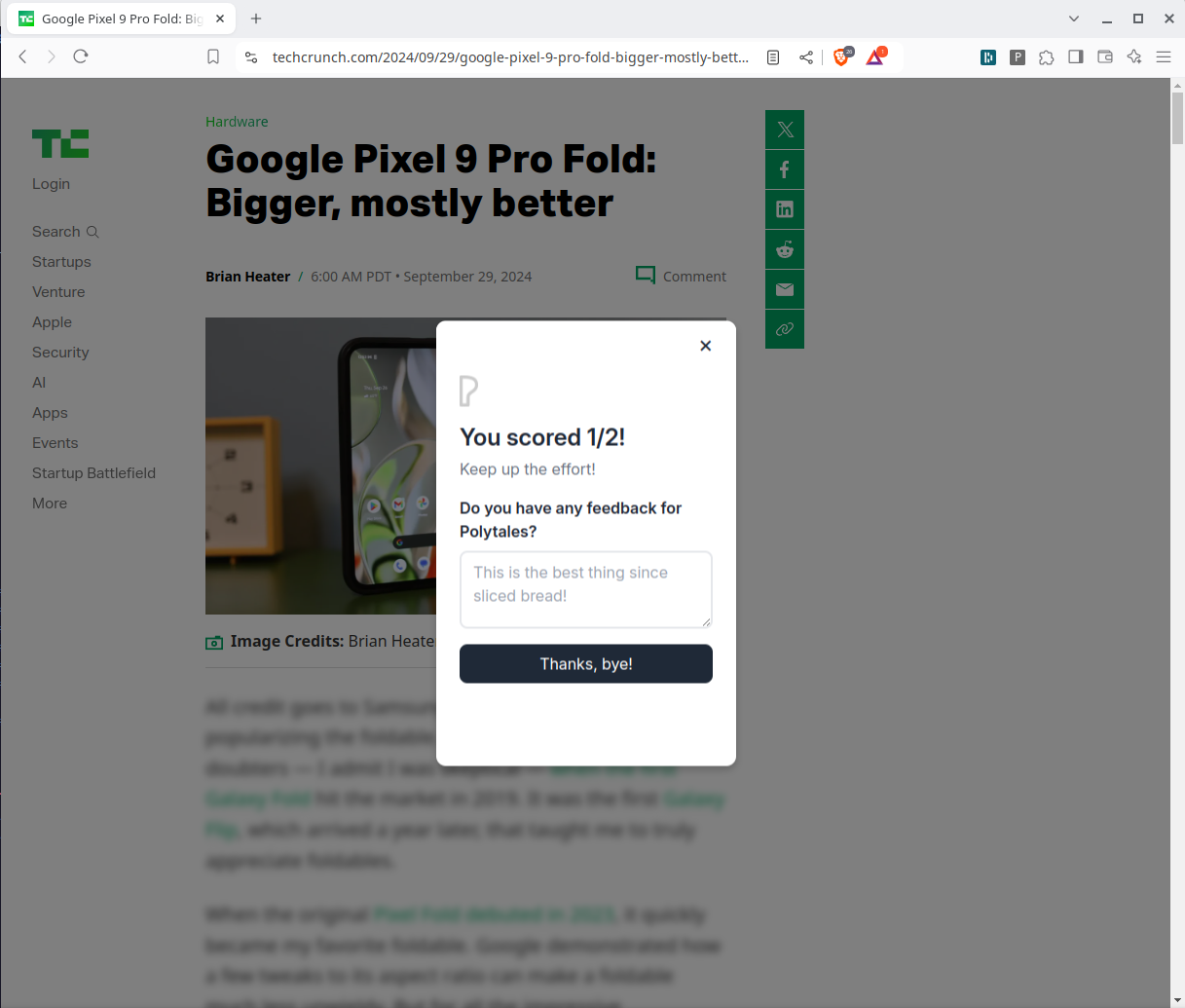

polytales is a browser extension that turns the web content you're already reading into reading practice in the language you're learning, pitched at your current reading level, and then tests your comprehension.

Here's version 0.3 (Sep 2024):

When I was learning Chinese, I used an app called 'Du Chinese', which offers preset articles labelled by HSK level. I wasn't very interested in its content and always wished I could read whatever I wanted while still using it as an opportunity to practice reading in another language. That's where I thought LLMs could help.

A lot of people have been using LLMs for language learning by building chatbots, but I personally didn't enjoy conversing with a chatbot that much. Every conversation seemed to turn into long-winded gibberish.

My thesis for this project rested on three key ideas:

1. Humans prefer to read what other humans write over what LLMs write.
2. LLMs are good at simplifying and translating.
3. We acquire languages largely through comprehensible input.

So if we can produce more comprehensible input sourced from human writing and adapted by LLMs, perhaps we could dramatically speed up reading development for language learners.

There are some flaws, though. LLMs don't always know what text at a given CEFR level looks like, and to produce a translation they may fall back on direct translations that contain difficult vocabulary. The output is also fundamentally limited by the source material.

No link yet; I'll probably release a later version.